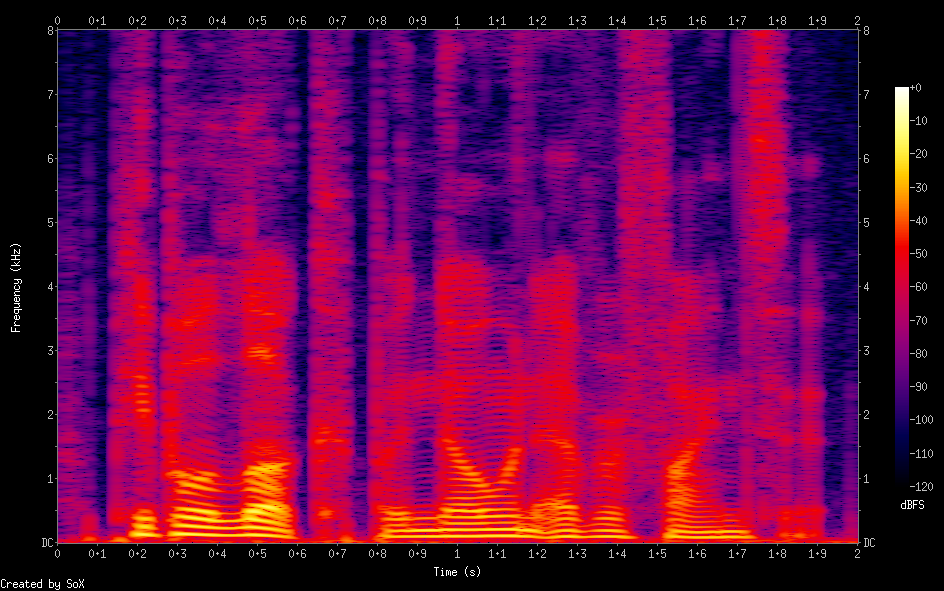

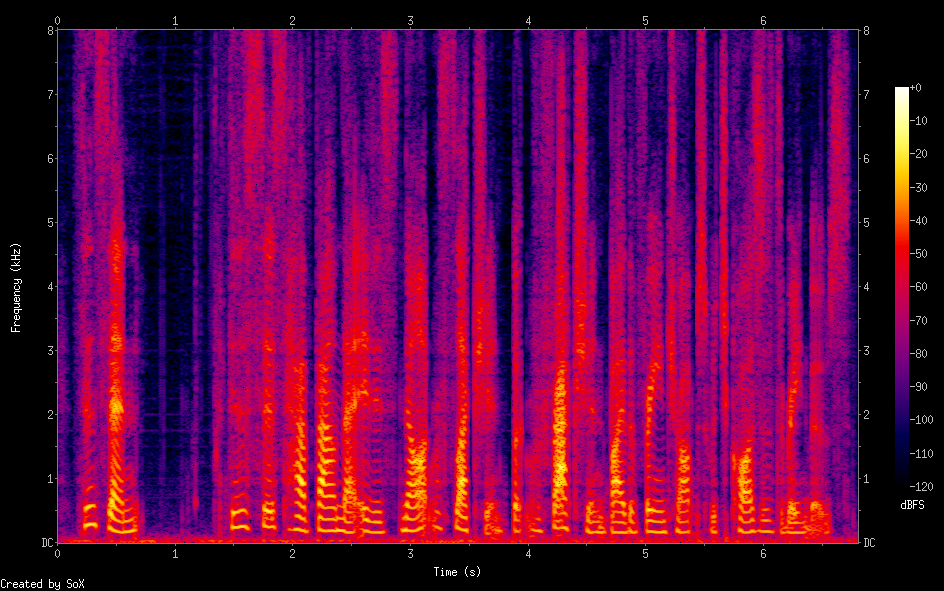

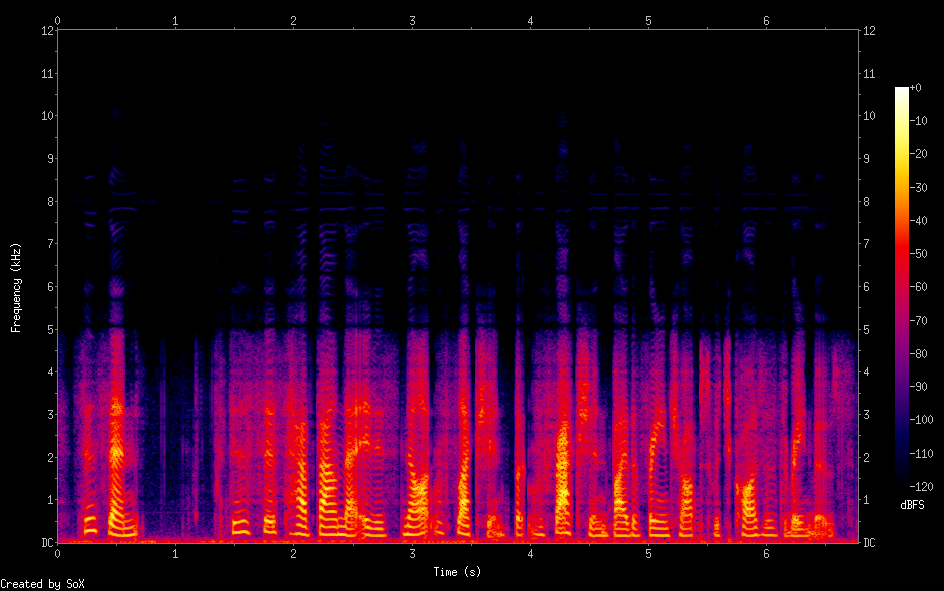

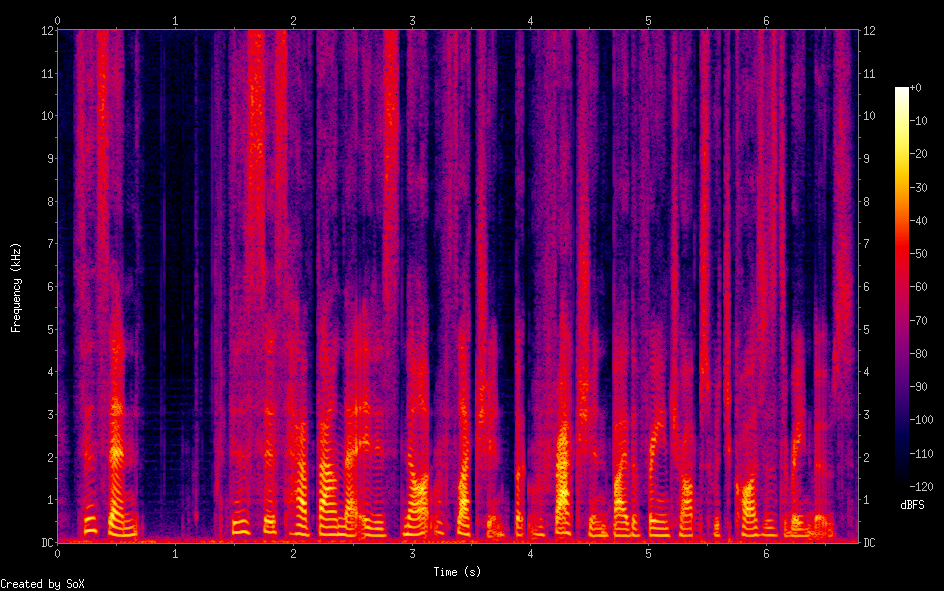

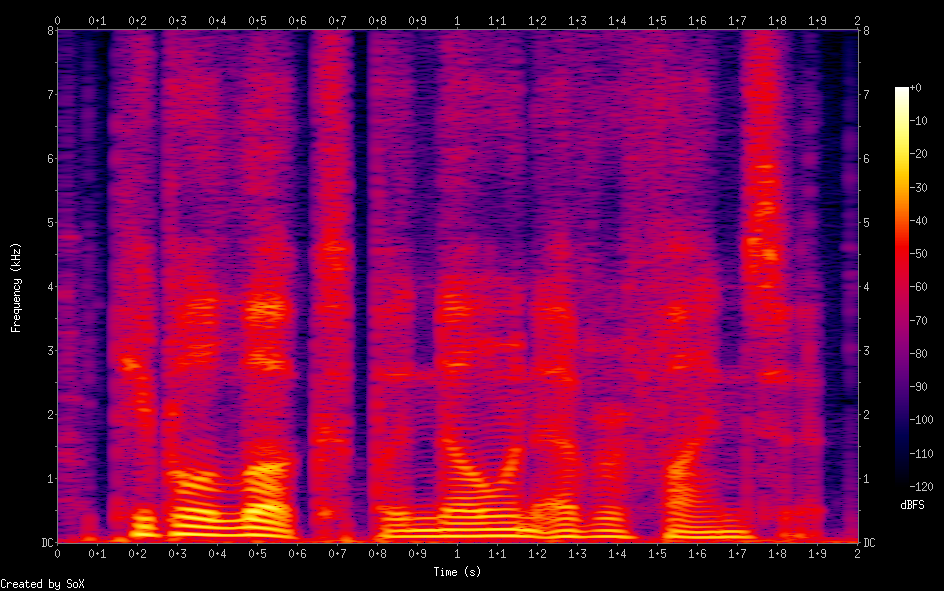

Section Ⅰ: Samples upsampled from 4 kHz to 16 kHz.

The model is trained on the first 100 speakers of the VCTK dataset. The following samples are generated from the remaining 8 speakers.
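The speaker-level split described above can be sketched as follows. This is a minimal illustration, assuming that "first 100 speakers" means the first 100 speaker IDs in sorted order; the page does not state the ordering, and the IDs below are hypothetical placeholders rather than real VCTK directory names.

```python
def split_speakers(speaker_ids, n_train=100):
    """Return (train, test): the first n_train IDs in sorted order, and the rest."""
    ordered = sorted(speaker_ids)
    return ordered[:n_train], ordered[n_train:]


# Hypothetical placeholder IDs standing in for 108 speaker directories.
speakers = [f"spk{i:03d}" for i in range(108)]
train_speakers, test_speakers = split_speakers(speakers)

print(len(train_speakers), len(test_speakers))  # 100 8
```

Splitting by speaker rather than by utterance keeps every test voice unseen during training, which is what the listening samples are meant to demonstrate.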

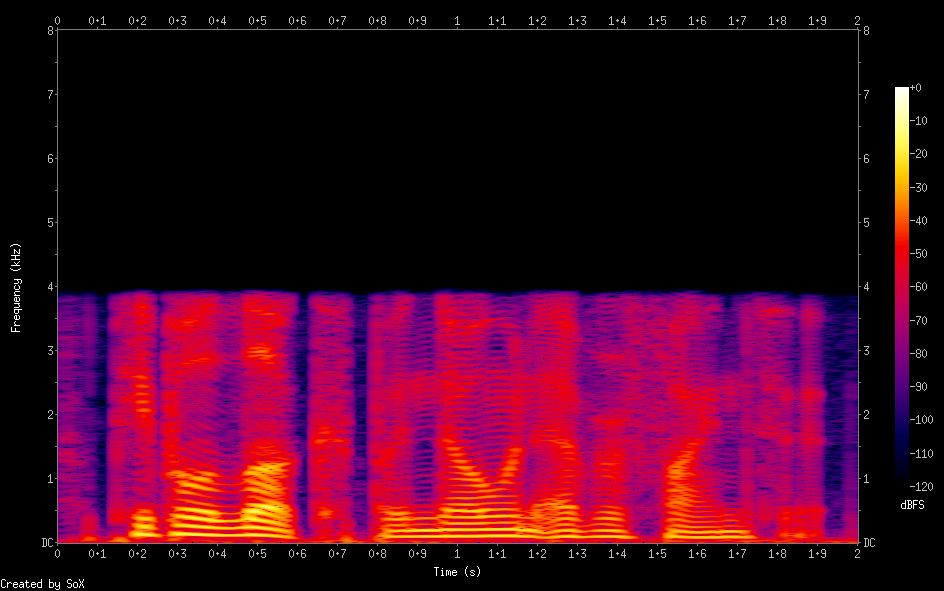

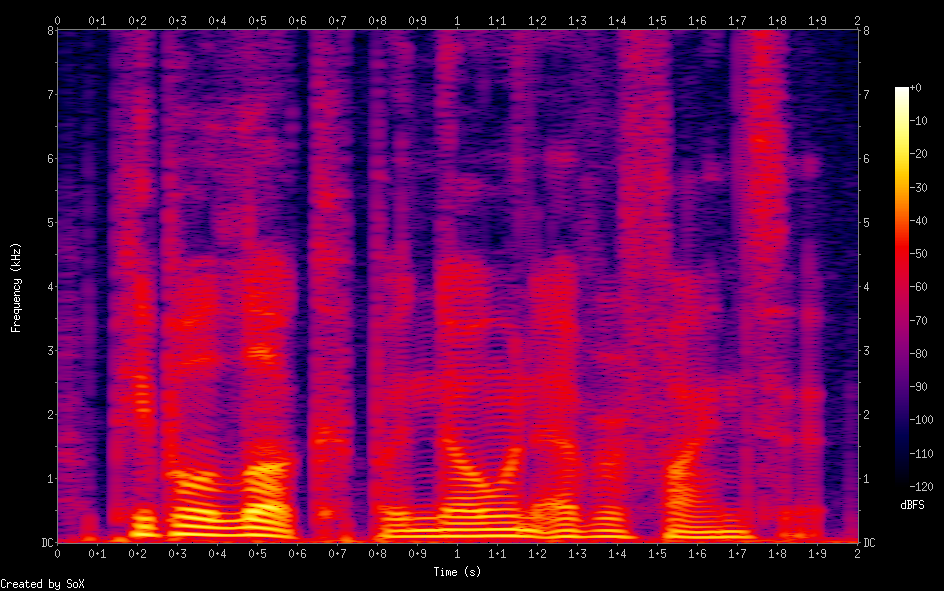

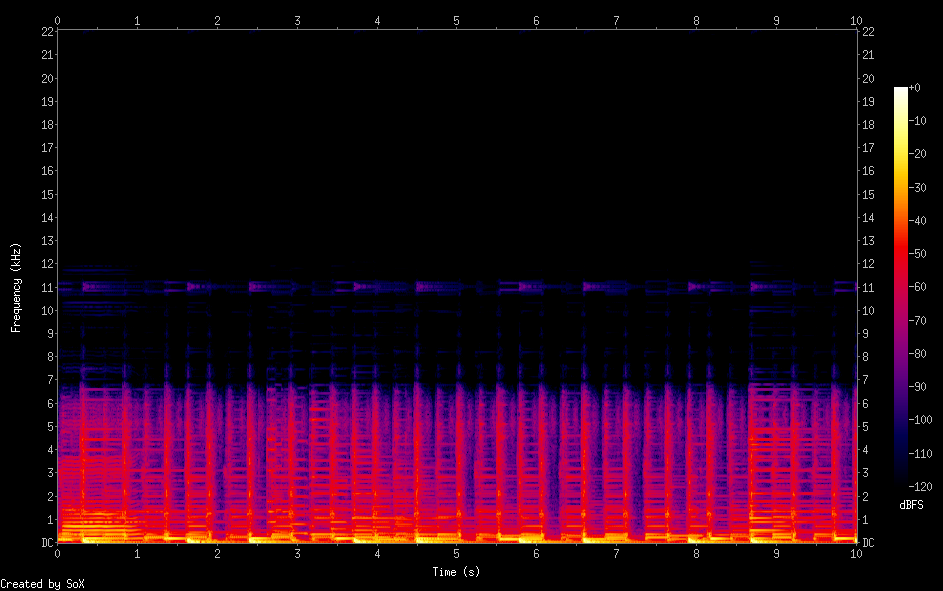

| Original low resolution (4 kHz -> 16 kHz) |

Original high resolution (16 kHz) | Sinc (16 kHz) | TFiLM (16 kHz) | SEANet (16 kHz) | Ours (16 kHz) | |

|---|---|---|---|---|---|---|

| Audio | ||||||

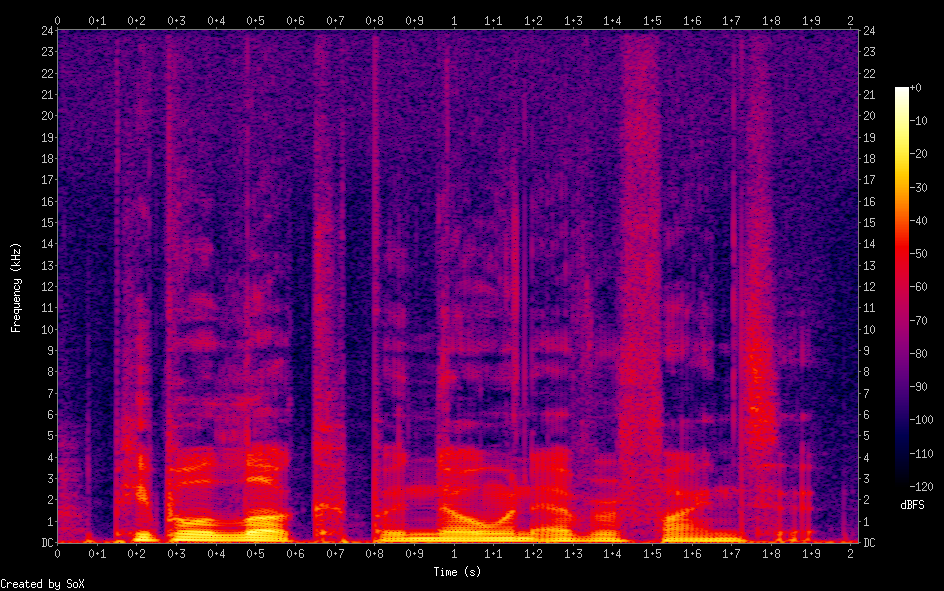

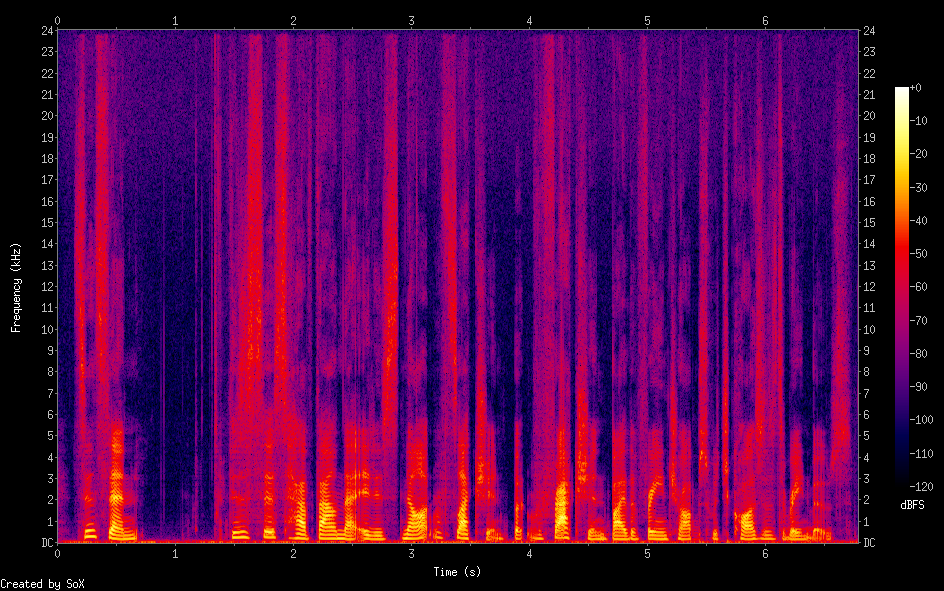

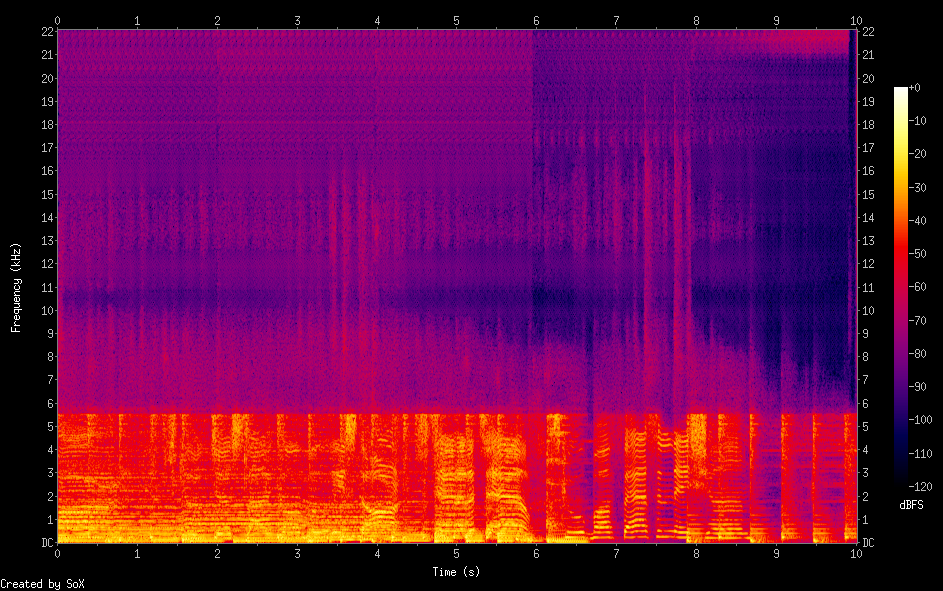

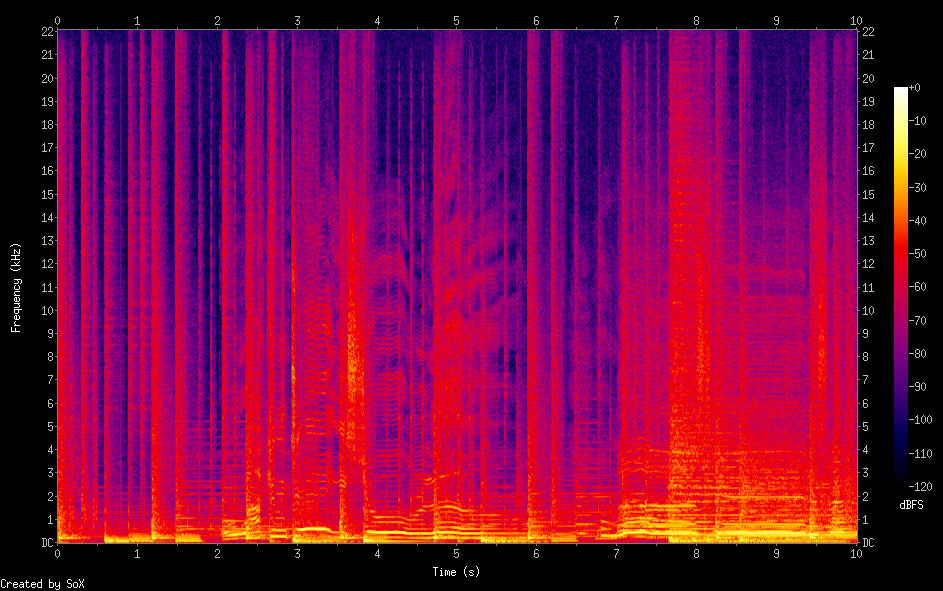

| LinearSpectrogram |  |

|

|

|

|

|

| Audio | ||||||

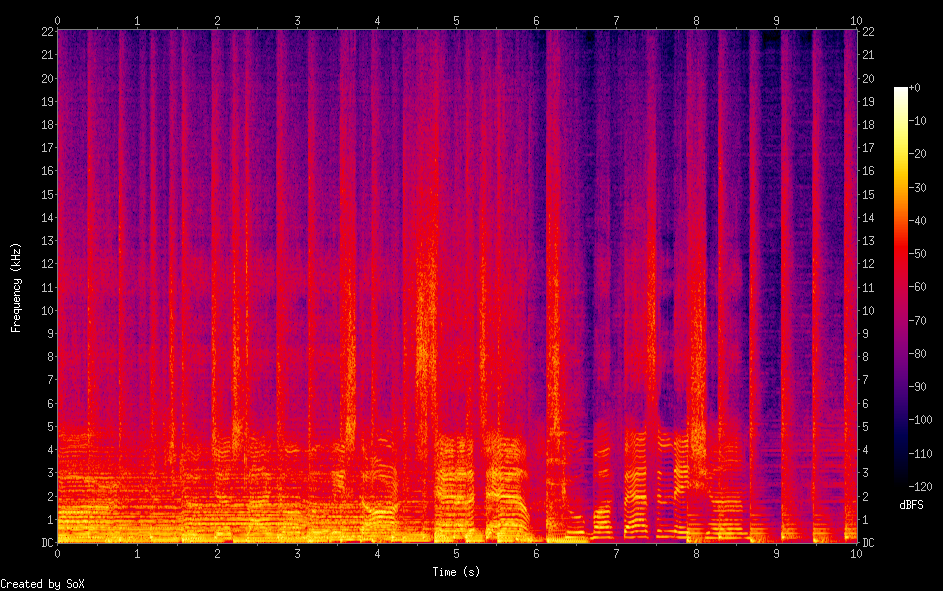

| LinearSpectrogram |  |

|

|

|

|

|

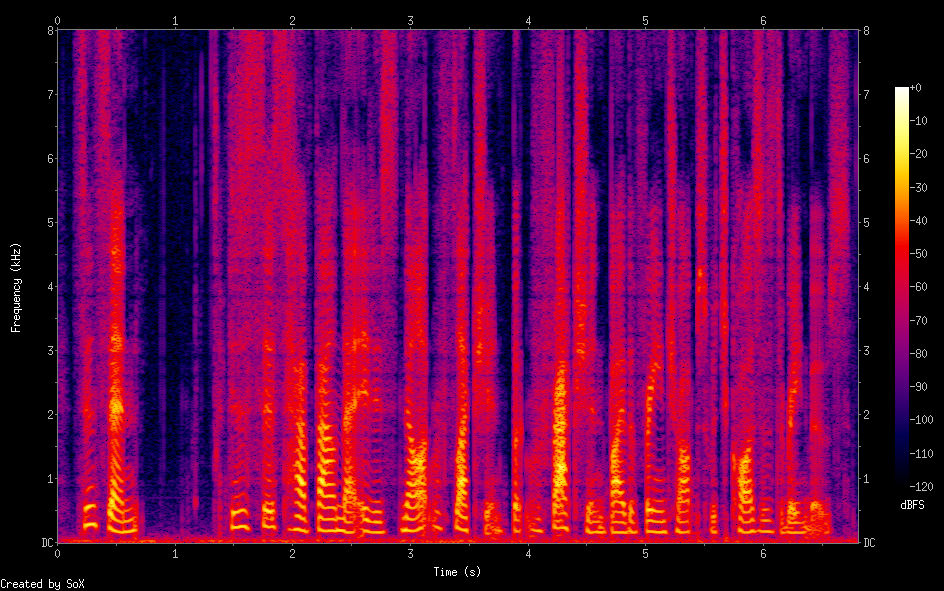

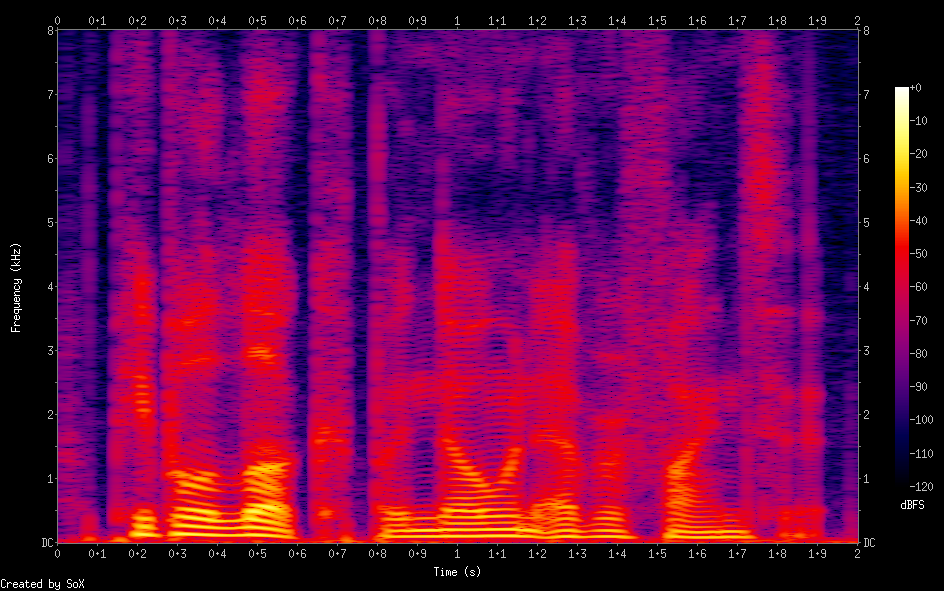

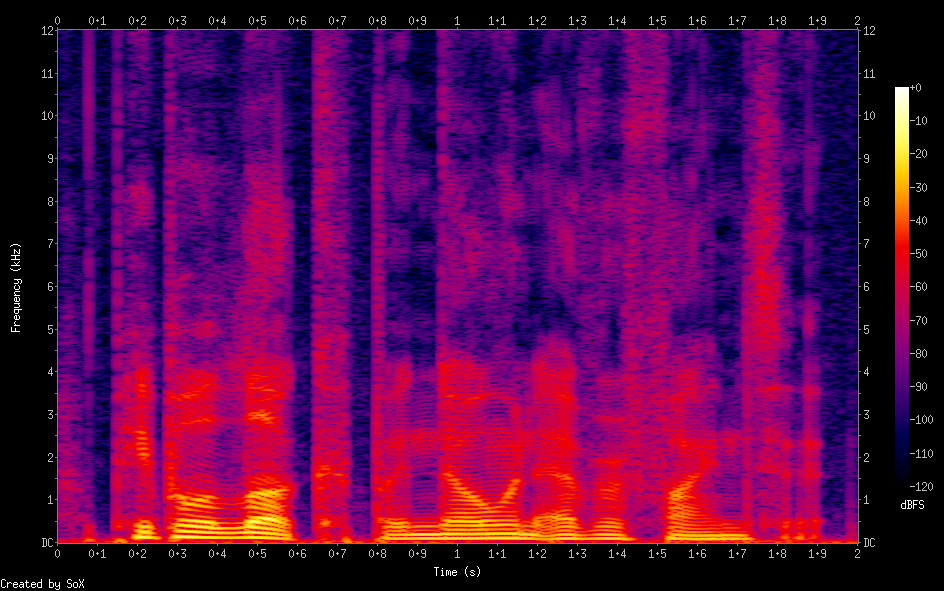

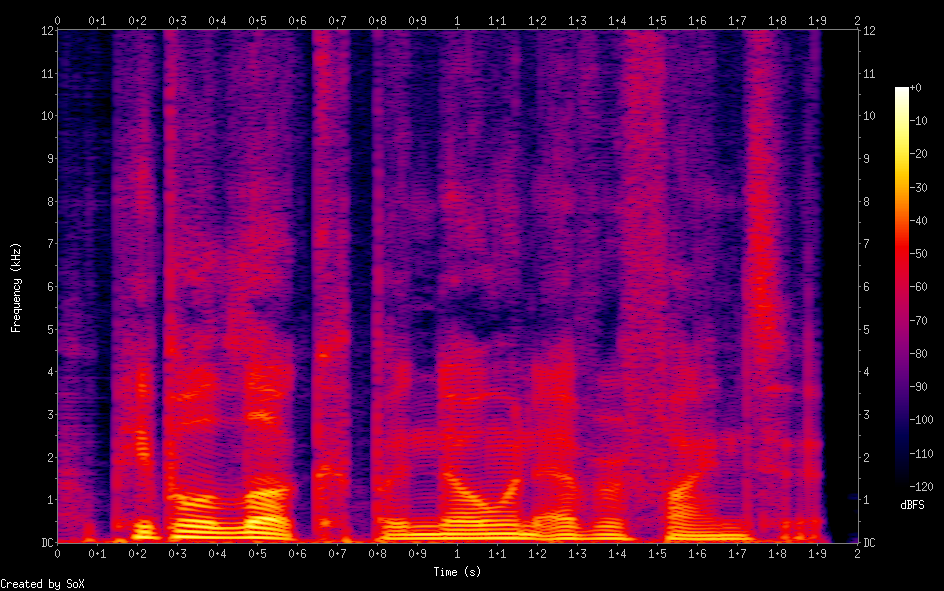

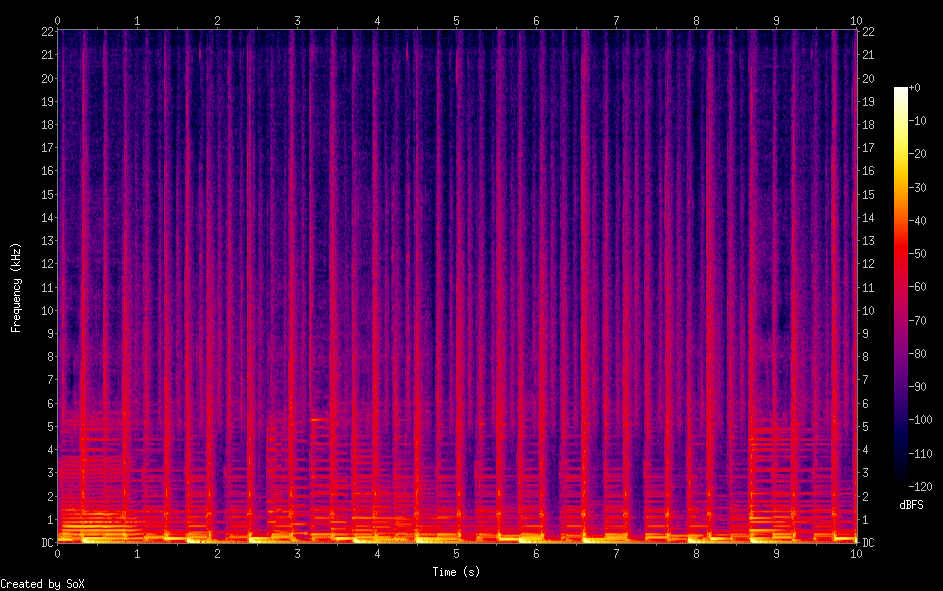

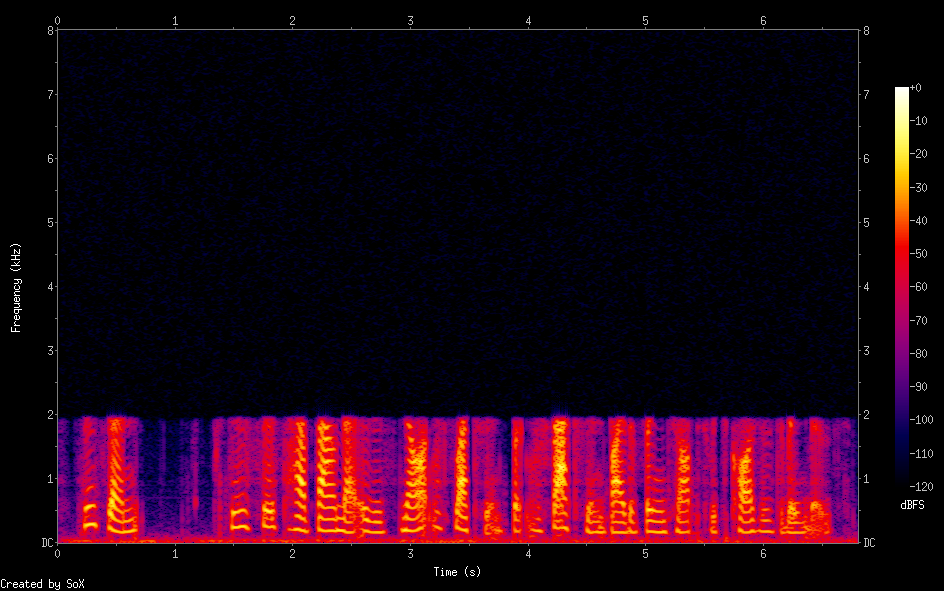

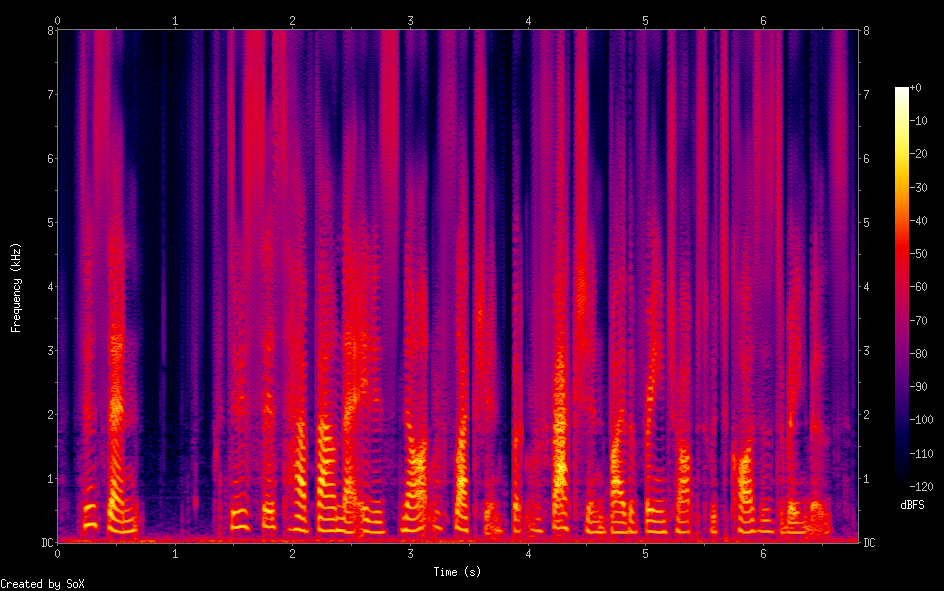

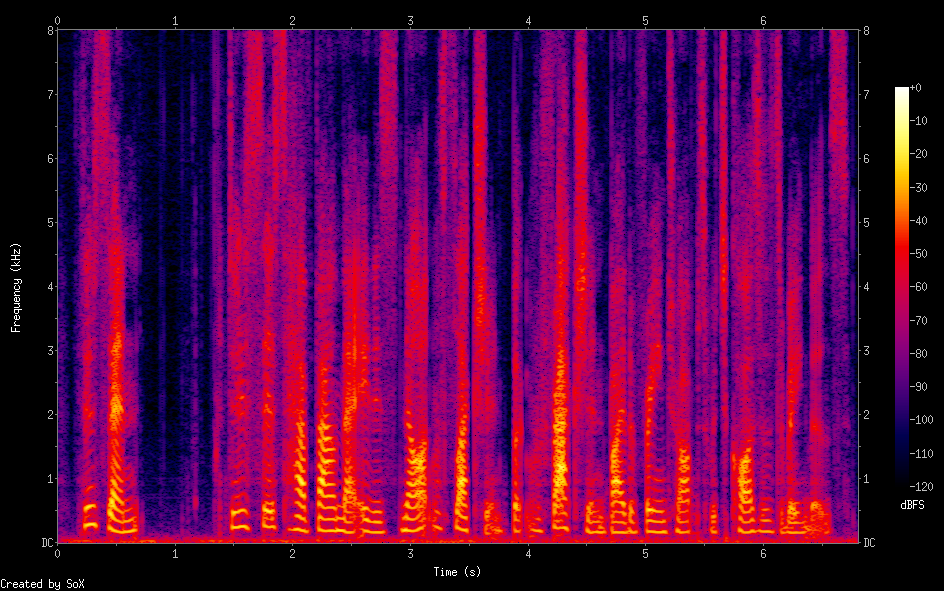
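The Sinc column in this section is a band-limited interpolation baseline. The page does not say how it was computed, so the following is only a sketch under stated assumptions: an integer upsampling factor and a Hann-windowed sinc kernel whose `half_width` is a hypothetical choice. It uses only the standard library so it can be run as is.

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def sinc_upsample(x, factor, half_width=16):
    """Upsample x by an integer factor with a Hann-windowed sinc kernel."""
    out = []
    for n in range(len(x) * factor):
        t = n / factor                      # output position in input-sample units
        center = math.floor(t)
        acc = 0.0
        for k in range(center - half_width + 1, center + half_width + 1):
            if 0 <= k < len(x):             # samples outside the signal count as zero
                d = t - k
                hann = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
                acc += x[k] * sinc(d) * hann
        out.append(acc)
    return out


# A 500 Hz tone sampled at 4 kHz, upsampled by 4 to 16 kHz.
low_sr, factor = 4000, 4
low = [math.sin(2 * math.pi * 500 * n / low_sr) for n in range(200)]
up = sinc_upsample(low, factor)

# Compare against the same tone sampled directly at 16 kHz, away from the edges.
ref = [math.sin(2 * math.pi * 500 * n / (low_sr * factor)) for n in range(len(up))]
interior = range(16 * factor, len(up) - 16 * factor)
max_err = max(abs(up[n] - ref[n]) for n in interior)
```

Interpolation of this kind reconstructs only what the 4 kHz signal already contains, that is, content below 2 kHz. It cannot restore the missing upper band, which is the part the learned systems in the table try to generate.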

Section Ⅱ: Samples upsampled from 8 kHz to 16 kHz.

The model is trained on the first 100 speakers of the VCTK dataset. The following samples are generated from the remaining 8 speakers.

| Original low resolution (4 kHz -> 16 kHz) |

Original high resolution (16 kHz) | Sinc (16 kHz) | TFiLM (16 kHz) | SEANet (16 kHz) | Ours (16 kHz) | |

|---|---|---|---|---|---|---|

| Audio | ||||||

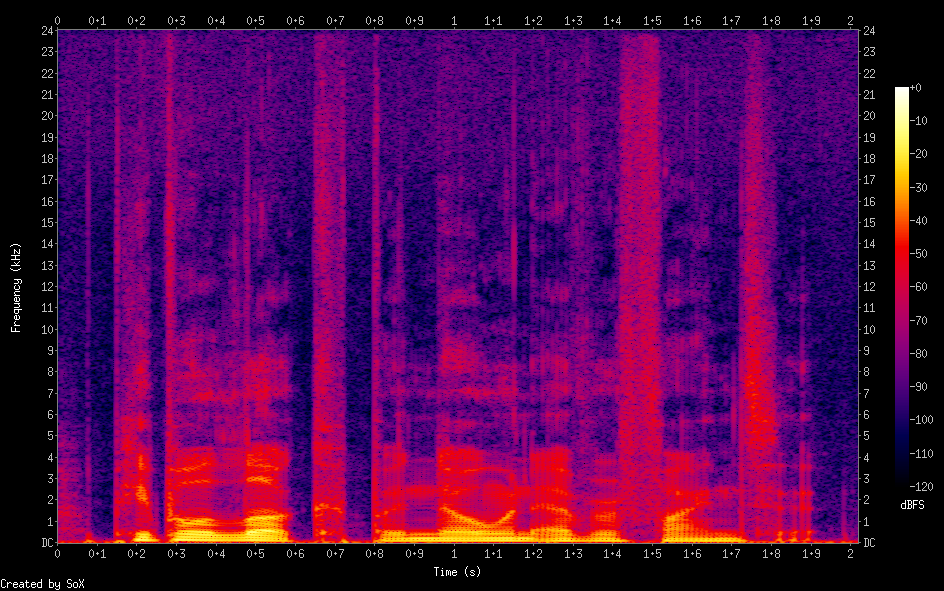

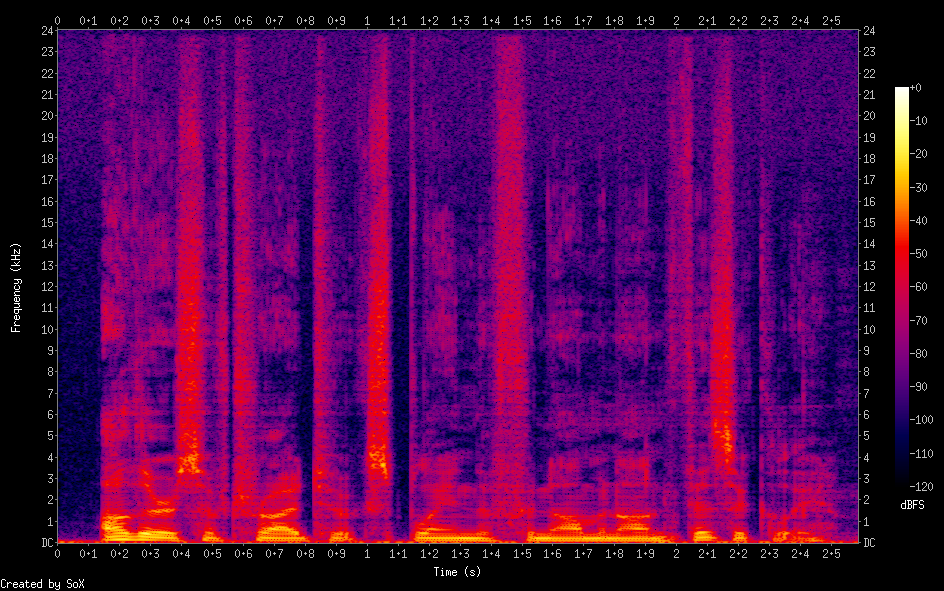

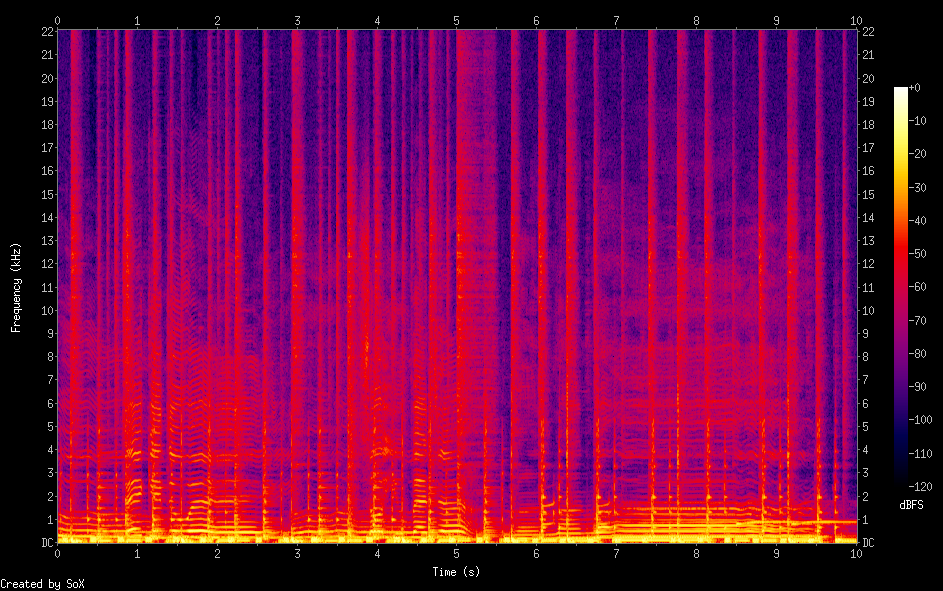

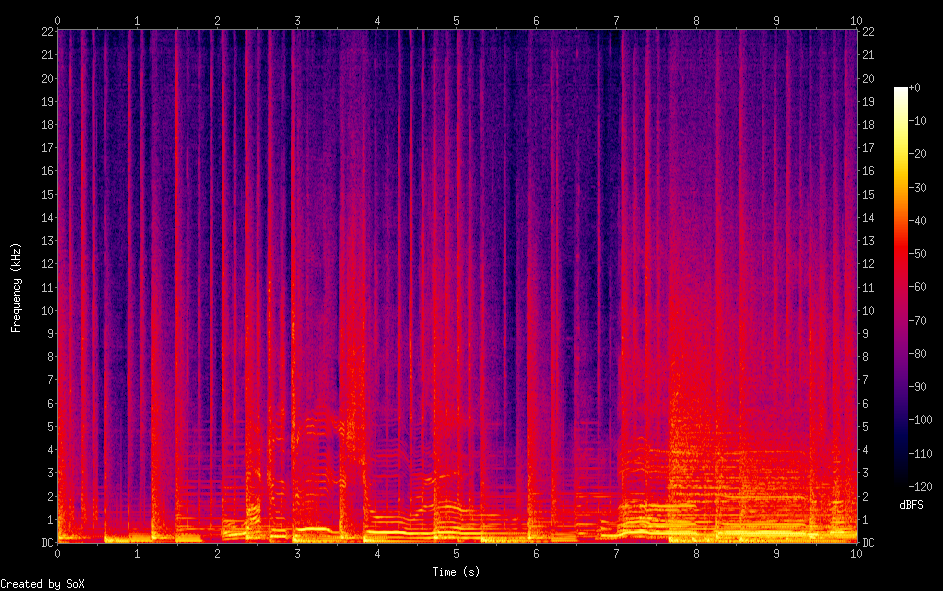

| LinearSpectrogram |  |

|

|

|

|

|

| Audio | ||||||

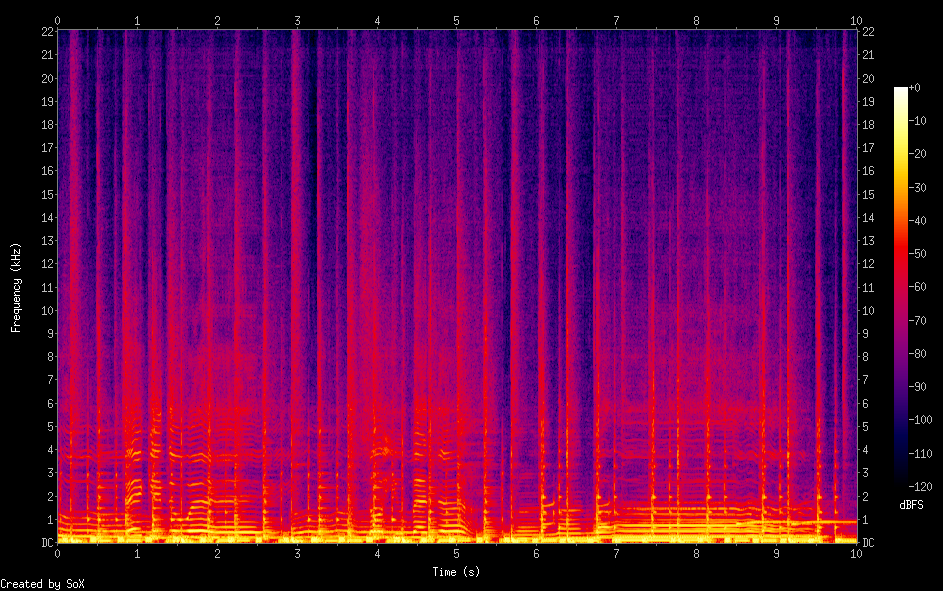

| LinearSpectrogram |  |

|

|

|

|

|

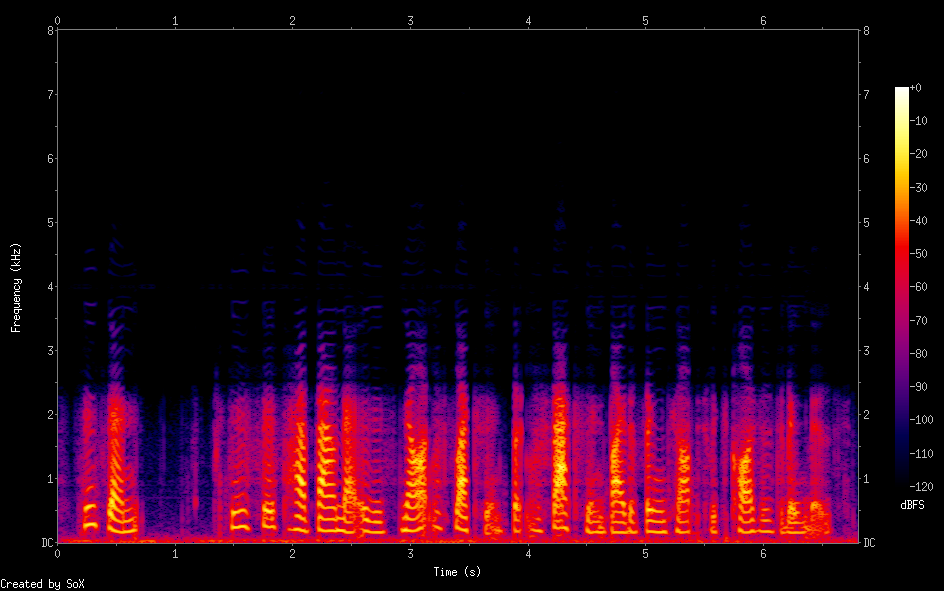

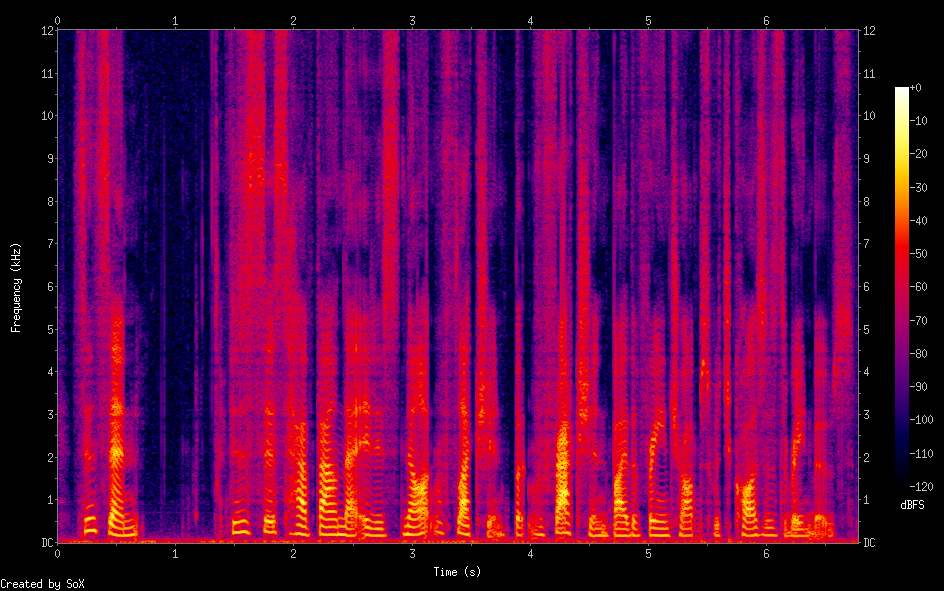

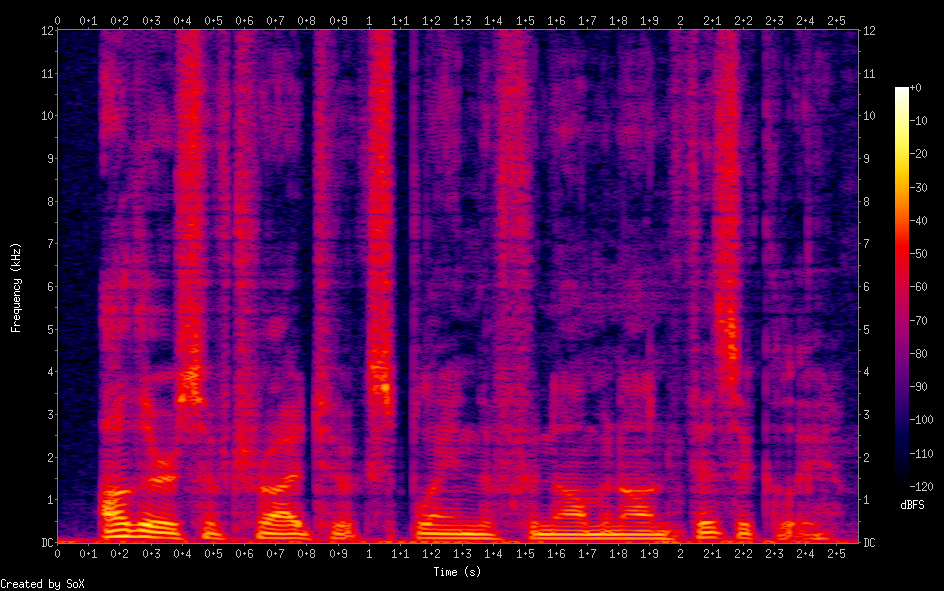

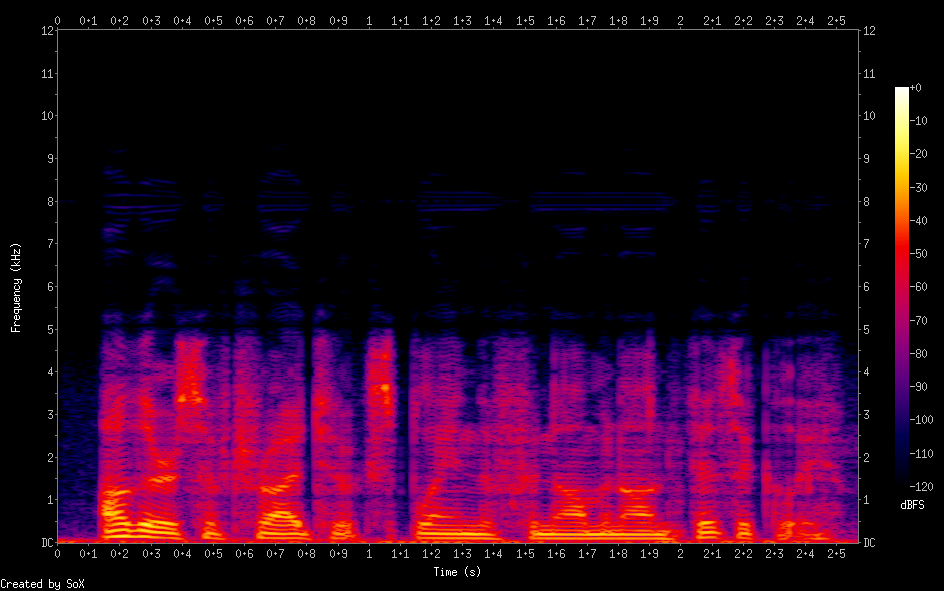

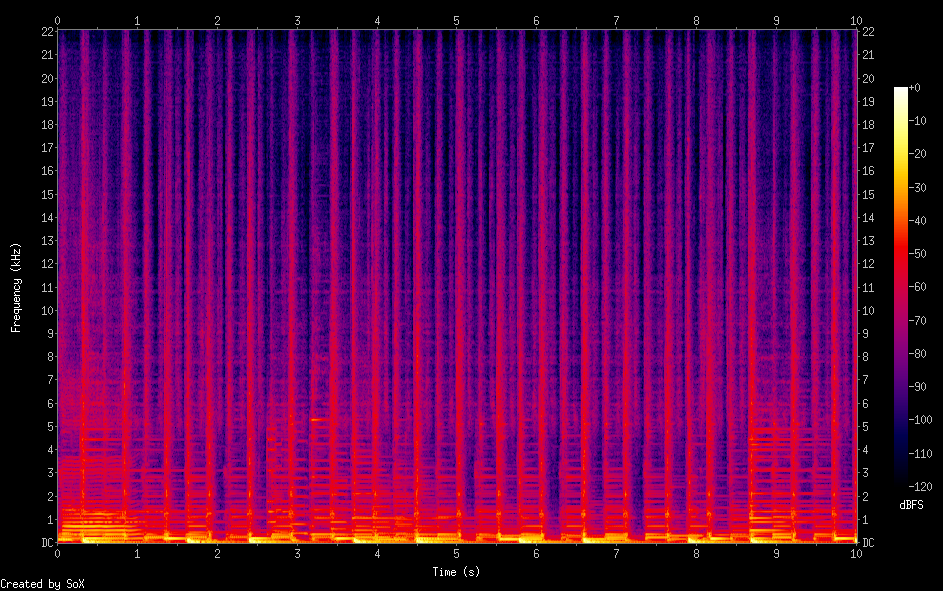

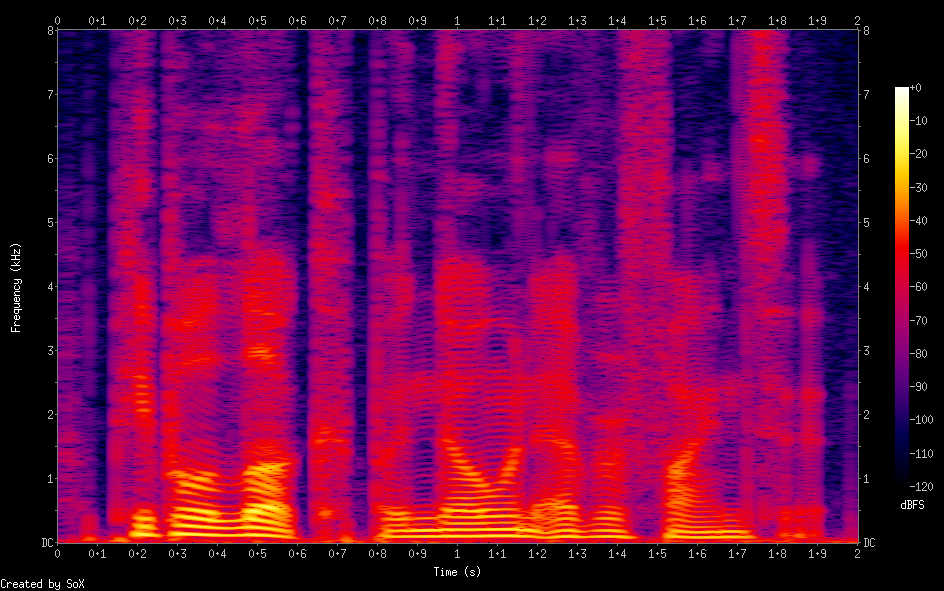
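The linear spectrogram rows show short-time magnitude spectra on a linear frequency axis. The analysis settings behind the images are not given on this page, so the sketch below assumes a Hann window with a hypothetical `frame_len` of 64 and `hop` of 16, and uses a naive DFT from the standard library for clarity rather than speed.

```python
import cmath
import math


def linear_spectrogram(x, frame_len=64, hop=16):
    """Magnitude STFT: one list of frame_len // 2 + 1 bin magnitudes per frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    n_bins = frame_len // 2 + 1
    frames = []
    for start in range(0, len(x) - frame_len + 1, hop):
        seg = [x[start + n] * window[n] for n in range(frame_len)]
        mags = []
        for k in range(n_bins):
            # Naive DFT of the windowed frame at bin k.
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            mags.append(abs(acc))
        frames.append(mags)
    return frames


# A 2 kHz tone at 16 kHz: bins are 250 Hz apart, so the peak falls in bin 8.
sr = 16000
tone = [math.sin(2 * math.pi * 2000 * n / sr) for n in range(512)]
spec = linear_spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(peak_bin * sr / 64)  # 2000.0
```

On such a plot, a band-limited input shows up as an empty region above half the low sampling rate, which makes it easy to see how much high-frequency content each system adds.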

Section Ⅲ: Samples upsampled from 8 kHz to 24 kHz.

The model is trained on the first 100 speakers of the VCTK dataset. The following samples are generated from the remaining 8 speakers.

| Original low resolution (8 kHz -> 24 kHz) |

Original high resolution (24 kHz) | Sinc (24 kHz) | SEANet (24 kHz) | Ours, hl=256 (24 kHz) | Ours, hl=128 (24 kHz) | Ours, hl=64 (24 kHz) | |

|---|---|---|---|---|---|---|---|

| Audio | |||||||

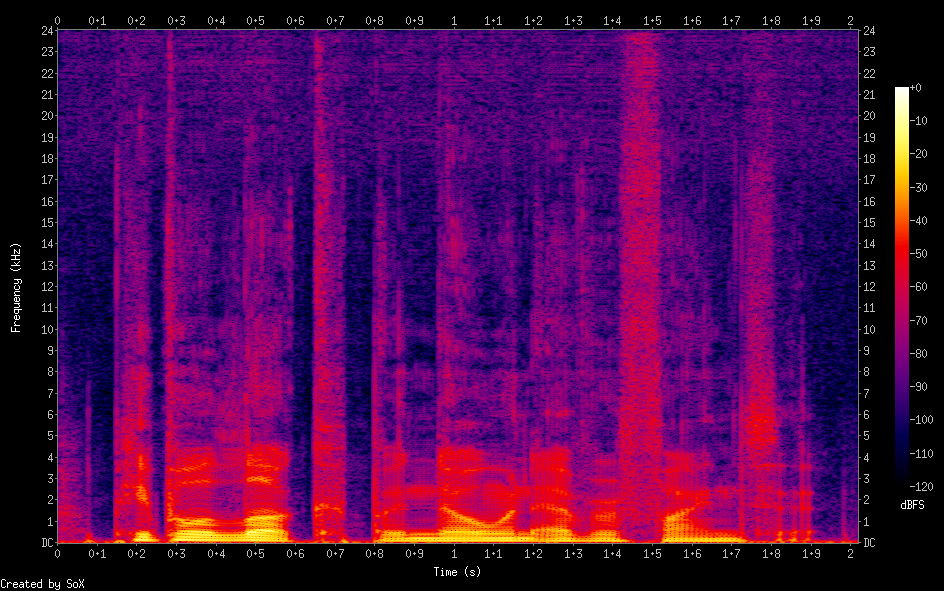

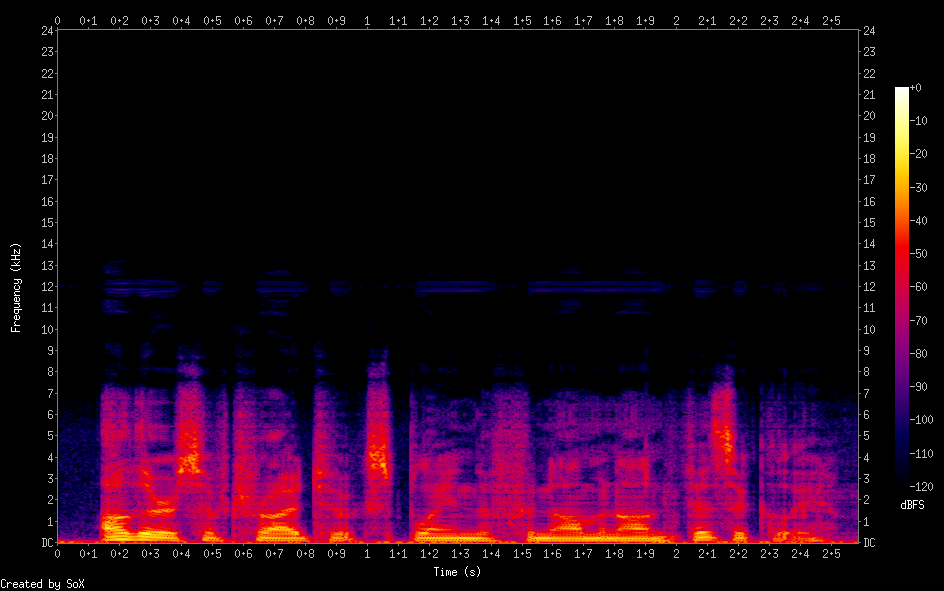

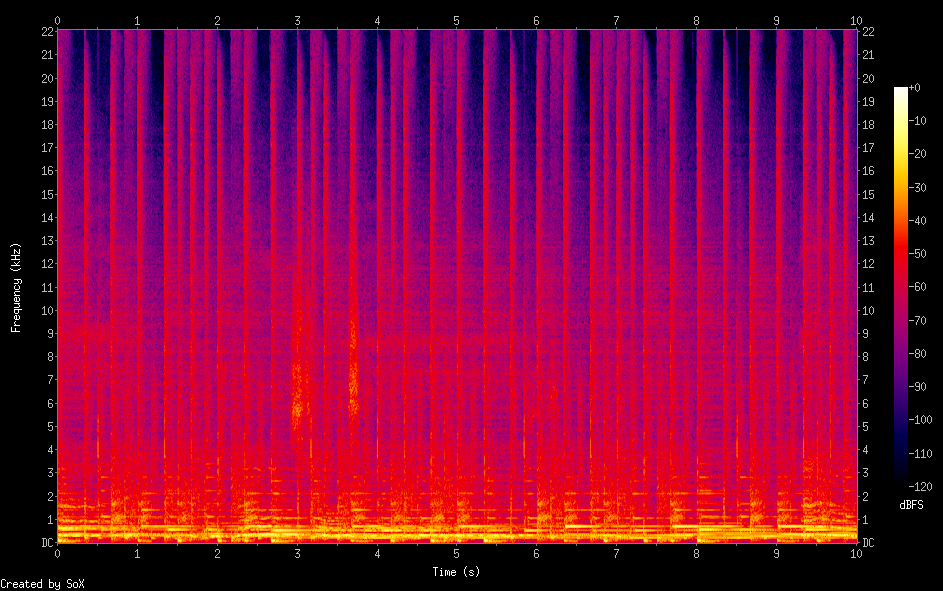

| LinearSpectrogram |  |

|

|

|

|

|

|

| Audio | |||||||

| LinearSpectrogram |  |

|

|

|

|

|

|

| Audio | |||||||

| LinearSpectrogram |  |

|

|

|

|

|

|

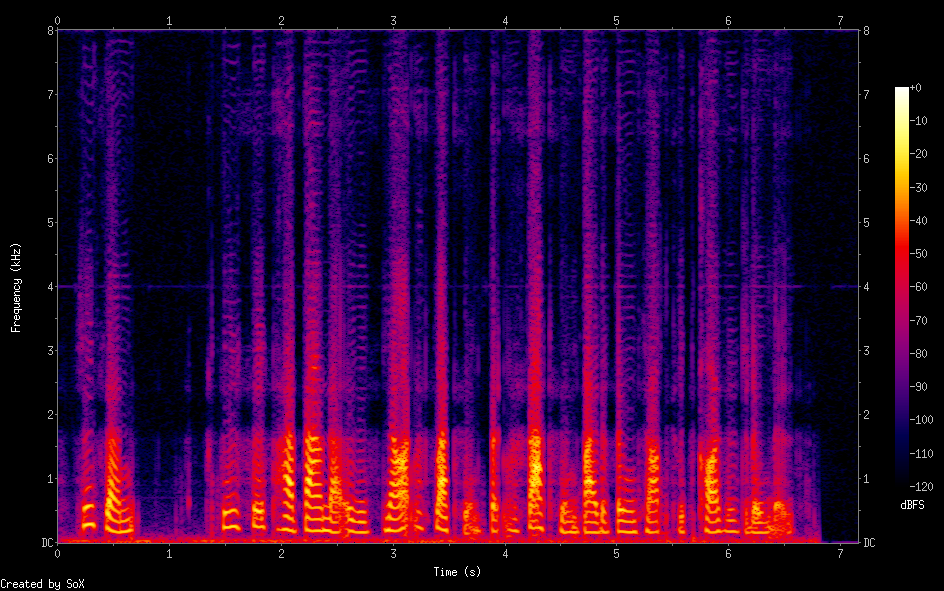

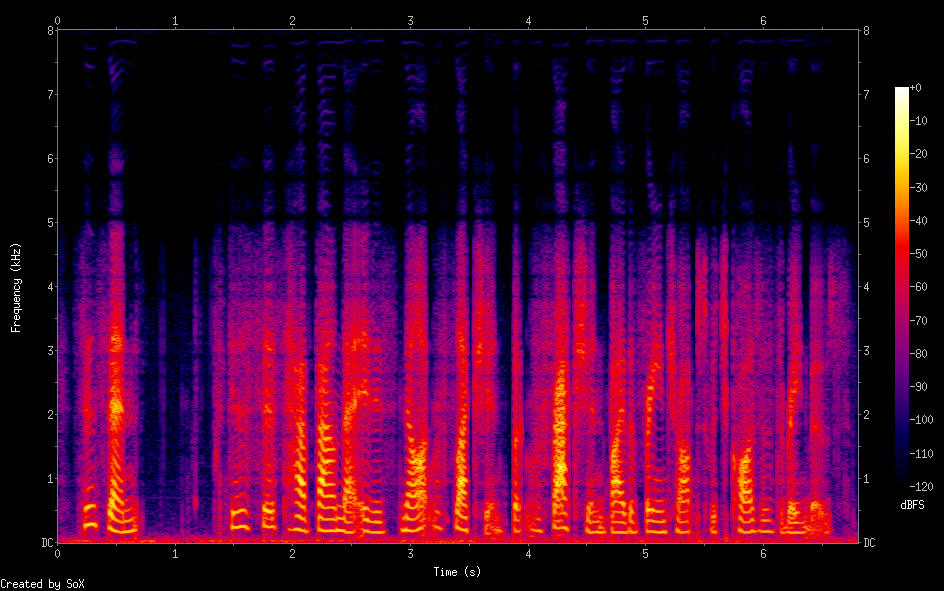

Section Ⅳ: Samples upsampled from 12 kHz to 48 kHz.

The model is trained on the first 100 speakers of the VCTK dataset. The following samples are generated from the remaining 8 speakers.

| Original low resolution (12 kHz -> 48 kHz) |

Original high resolution (48 kHz) | Sinc (48 kHz) | SEANet (48 kHz) | Nu-wave 2 (48 kHz) | Ours (48 kHz) | |

|---|---|---|---|---|---|---|

| Audio | ||||||

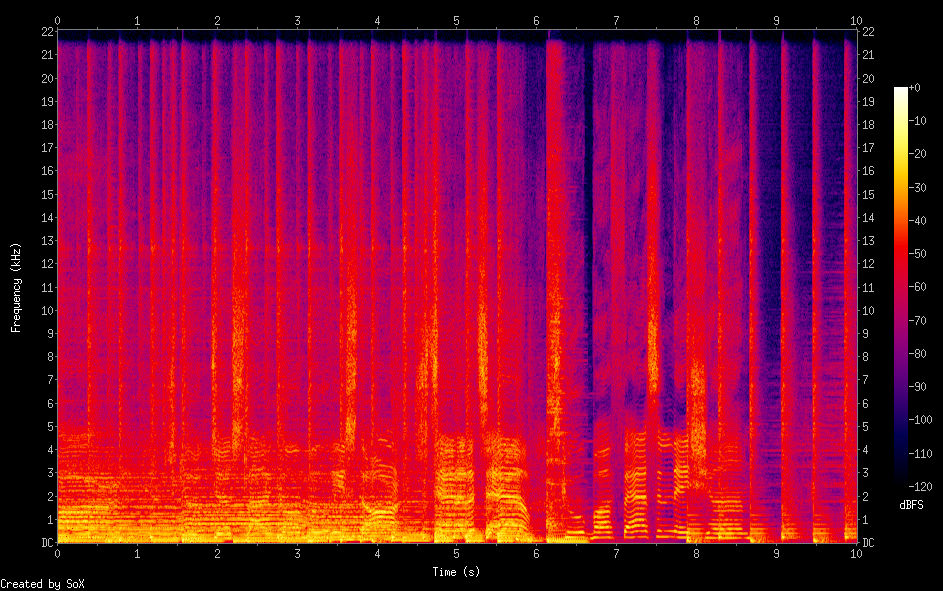

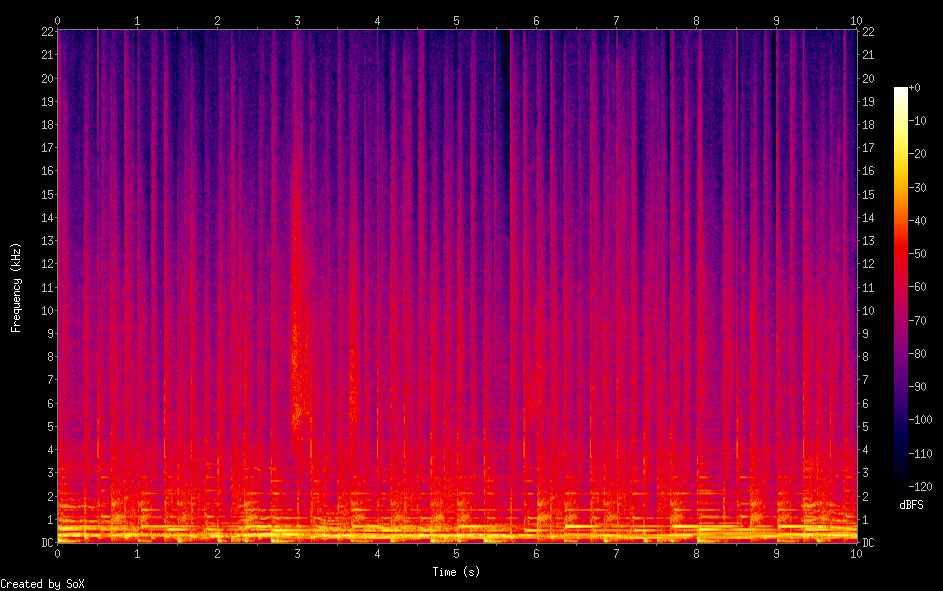

| LinearSpectrogram |  |

|

|

|

|

|

| Audio | ||||||

| LinearSpectrogram |  |

|

|

|

|

|

| Audio | ||||||

| LinearSpectrogram |  |

|

|

|

|

|

Section Ⅴ: Samples upsampled from 11.025 kHz to 44.1 kHz.

The model is trained on the train set of the MusDB-HQ dataset. The following samples are generated from the test set.

| Original low resolution (11 kHz -> 44 kHz) |

Original high resolution (44 kHz) | Sinc (44 kHz) | SEANet (44 kHz) | BEHM-Gan(44 kHz) | Ours, hl=256 (44 kHz) | Ours, hl=128 (44 kHz) | Ours, hl=64 (44 kHz) | |

|---|---|---|---|---|---|---|---|---|

| Audio | ||||||||

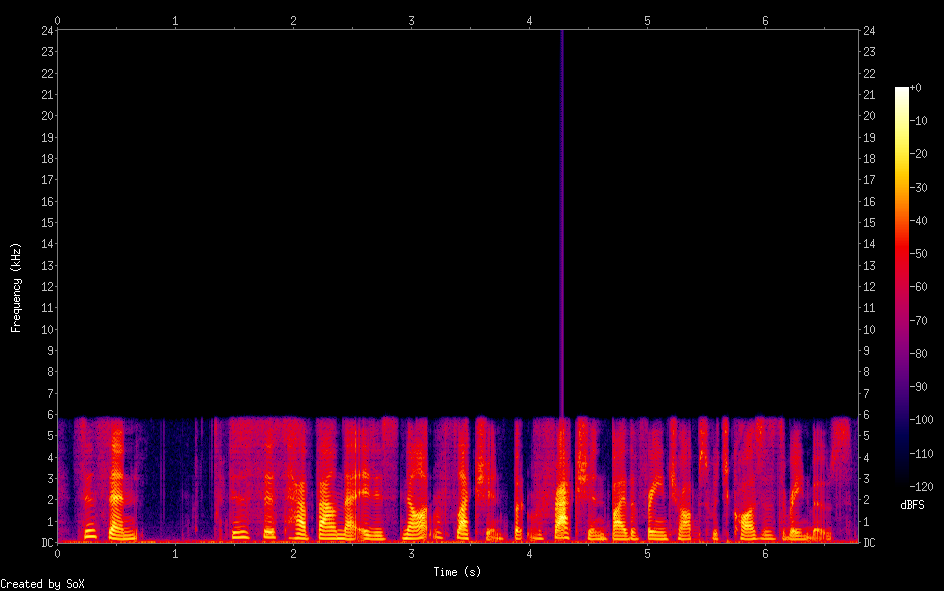

| LinearSpectrogram |  |

|

|

|

|

|

|

|

| Audio | ||||||||

| LinearSpectrogram |

|

|

|

|

|

|

|

|

| Audio | ||||||||

| LinearSpectrogram |

|

|

|

|

|

|

|

|

| Audio | ||||||||

| LinearSpectrogram |

|

|

|

|

|

|

|

|

| Audio | ||||||||

| LinearSpectrogram |

|

|

|

|

|

|

|

|

Section Ⅵ: Samples for the adversarial ablation study.

The model is trained on the first 100 speakers of the VCTK dataset. The following samples are generated from the remaining 8 speakers.

| | Original low resolution (4 kHz -> 16 kHz) | Original high resolution (16 kHz) | Non adv. (16 kHz) | 3 MSD: Adv. loss only (16 kHz) | 3 MSD: Feature loss only (16 kHz) | 1 MSD (16 kHz) | 3 MSD (16 kHz) |
|---|---|---|---|---|---|---|---|
| Audio | | | | | | | |
| Linear spectrogram | | | | | | | |
| Audio | | | | | | | |
| Linear spectrogram | | | | | | | |